Bridging the gap between simulation and real-world applications, often referred to as the “reality gap,” is a significant challenge in fields like robotics, autonomous driving, and industrial automation. While simulations offer a controlled environment for developing and testing systems, discrepancies between simulated models and real-world conditions can lead to performance issues when these systems are deployed.

Key Challenges in Bridging the Simulation-to-Reality Gap:

1. Modeling Inaccuracies:

a. Physical Dynamics

Simulations often struggle to accurately replicate complex physical interactions, such as contact dynamics in robotic manipulation. These inaccuracies can result in behaviors that perform well in simulation but fail in real-world scenarios. Some key factors include:

- Differences in friction, material elasticity, and deformability.

- Simplified physics engines that do not account for micro-level interactions.

- Limitations in simulating fluid dynamics and aerodynamics in soft robotics.

b. Environmental Factors

Variations in environmental conditions like lighting, texture, and material properties are difficult to model precisely, leading to a gap between simulated and actual sensor data. Some examples include:

- Autonomous vehicles performing well in simulations but struggling with glare, rain, or fog in real-world driving.

- Industrial robots failing to grasp objects due to unseen variations in texture or surface reflectivity.

2. Sensor and Perception Discrepancies:

a. Visual Perception

Simulated environments may not capture the full variability and noise present in real-world sensor data, causing perception algorithms to misinterpret or overlook critical information during deployment. Examples include:

- Cameras in simulations provide perfect depth estimation, while real-world cameras have lens distortions and motion blur.

- Simulated LiDAR produces ideal point clouds, whereas real LiDAR suffers from reflections and occlusions.
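
One common way to narrow this gap is to degrade ideal simulated scans before they reach the perception stack. The following is a minimal sketch, not a model of any particular sensor: the function name `degrade_scan`, the noise level, the dropout probability, and the convention of marking a missing return as infinity are all assumptions chosen for illustration.

```python
import random


def degrade_scan(ranges, noise_std=0.02, dropout_prob=0.05, max_range=30.0, seed=None):
    """Corrupt an ideal list of LiDAR ranges (meters) with noise and missing returns.

    All default values are illustrative assumptions, not sensor specifications.
    """
    rng = random.Random(seed)
    degraded = []
    for r in ranges:
        # Beams beyond the sensor limit, or randomly dropped beams, yield no return.
        if r > max_range or rng.random() < dropout_prob:
            degraded.append(float("inf"))
        else:
            # Additive Gaussian range noise, clamped so ranges stay non-negative.
            degraded.append(max(0.0, r + rng.gauss(0.0, noise_std)))
    return degraded
```

Training or testing a perception algorithm on `degrade_scan(ideal_ranges)` instead of the ideal ranges gives a rough first check of how sensitive it is to imperfect point clouds.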

b. Sensor Noise

Real sensors are subject to noise and imperfections that are often underestimated or omitted in simulations, affecting the reliability of sensor-dependent systems. For example:

- Simulated IMU (Inertial Measurement Unit) readings are noise-free, while real IMUs experience drift and temperature variations.

- GPS simulations provide precise positioning, while real GPS signals can be obstructed by buildings or interference.
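
The IMU drift mentioned above can be approximated in simulation by adding white noise and a random-walk bias to the ideal signal. The sketch below assumes a single-axis gyroscope. The class name `NoisyGyro` and both noise parameters are hypothetical values for illustration and would need to be replaced with figures from a real sensor's datasheet or recorded data.

```python
import random


class NoisyGyro:
    """Adds white noise and a slowly drifting bias to an ideal angular-rate signal."""

    def __init__(self, noise_std=0.01, bias_walk_std=0.001, seed=None):
        self.noise_std = noise_std          # rad/s per sample (assumed value)
        self.bias_walk_std = bias_walk_std  # rad/s per sqrt(s) (assumed value)
        self.bias = 0.0
        self.rng = random.Random(seed)

    def measure(self, true_rate, dt):
        # The bias follows a random walk, so its variance grows with elapsed time.
        self.bias += self.rng.gauss(0.0, self.bias_walk_std * dt ** 0.5)
        return true_rate + self.bias + self.rng.gauss(0.0, self.noise_std)


def integrate_heading(gyro, true_rate, dt, steps):
    """Dead-reckon a heading (rad) by integrating the measured rate."""
    heading = 0.0
    for _ in range(steps):
        heading += gyro.measure(true_rate, dt) * dt
    return heading
```

Comparing `integrate_heading` for a noise-free gyro and a noisy one shows how small per-sample errors accumulate into heading drift, which a noise-free simulated IMU never exposes.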

3. Actuation Differences:

a. Mechanical Variations

Actuator effects such as friction and backlash cause real movements to deviate from simulated ones, which hurts tasks that require precision. Some issues include:

- The same control commands produce different joint angles in a real robot compared to simulation.

- Wear and tear affects the performance of actuators over time, which is not modeled in simulation.
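
To illustrate why identical commands can produce different joint angles, the sketch below applies a simple backlash (mechanical play) model to a command sequence. The function name `apply_backlash` and the gap width are assumptions for illustration. Real gear trains also exhibit friction and compliance that this model ignores.

```python
def apply_backlash(commands, gap, start=0.0):
    """Return joint positions when the drive has `gap` units of mechanical play.

    The output only moves once the command has taken up the slack on one
    side of the gap; inside the gap the output holds its last position.
    """
    half = gap / 2.0
    out = start
    positions = []
    for cmd in commands:
        # Clamp the previous output into the window [cmd - half, cmd + half].
        out = min(max(out, cmd - half), cmd + half)
        positions.append(out)
    return positions
```

For example, with `gap=0.2` the commands `[0.0, 0.5, 1.0, 0.9, 0.5, 0.0]` yield an output that trails the command by half the gap while moving and does not respond at all to the small reversal from 1.0 to 0.9.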

b. Latency Issues

Simulations may not account for communication delays and processing times inherent in real-world systems, affecting the timing and coordination of actions. Examples:

- A robotic arm in simulation responds instantaneously, whereas real-world actuators have response delays.

- Network latency disrupts multi-robot coordination in warehouse automation.
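
A simple way to expose a controller to such delays in simulation is to route its commands through a fixed-length buffer. The sketch below is one possible approach under stated assumptions: the class name `DelayedActuator` is hypothetical, and the delay is assumed to be constant and a whole number of control steps, whereas real latency usually varies.

```python
from collections import deque


class DelayedActuator:
    """Applies each command a fixed number of control steps after it was issued."""

    def __init__(self, delay_steps, initial_command=0.0):
        # Pre-fill the buffer so the first `delay_steps` outputs are the initial command.
        self.buffer = deque([initial_command] * delay_steps)

    def step(self, command):
        """Queue the new command and return the one that takes effect now."""
        self.buffer.append(command)
        return self.buffer.popleft()
```

With `delay_steps=2`, feeding the commands 1.0, 2.0, 3.0, 4.0 produces the applied values 0.0, 0.0, 1.0, 2.0, so a controller tuned against an instantaneous simulated arm can be retested against a delayed one.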

4. Simplified Assumptions:

a. Overgeneralization

To make simulations computationally feasible, simplifications are made that may overlook edge cases or rare events, which can be critical in real-world applications. Examples:

- Simulated autonomous cars trained only in ideal traffic conditions struggling in real-world dense urban environments.

- Warehouse robots trained in static environments failing to adapt to dynamic human interactions.

b. Behavioral Modeling

Human behaviors and interactions are complex and often oversimplified in simulations, leading to unexpected outcomes when systems are deployed in human-centric environments. Issues include:

- Social navigation models in robots failing to predict real human movement patterns.

- Customer service robots struggling with ambiguous or non-standard human communication.

Strategies to Mitigate the Reality Gap:

1. Domain Randomization:

Domain randomization exposes the model to a wide range of simulated variations during training, such as altered textures, lighting, and object positions. By encountering diverse scenarios, the model learns to generalize better to unforeseen real-world conditions. Examples include:

- OpenAI’s robotic hand trained in simulation using randomized physics parameters to successfully manipulate objects in the real world.

- Autonomous cars trained with varying weather conditions, different vehicle models, and randomized pedestrian behaviors.
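
In practice, domain randomization often amounts to sampling a fresh set of simulator parameters at the start of every training episode. The following sketch shows that sampling step only. The `EpisodeParams` fields and every numeric range are assumptions chosen for illustration, and applying the parameters depends on the simulator in use.

```python
import random
from dataclasses import dataclass


@dataclass
class EpisodeParams:
    friction: float         # contact friction coefficient
    mass_scale: float       # multiplier on nominal object mass
    light_intensity: float  # relative scene brightness
    texture_id: int         # index into a hypothetical texture library
    object_xy: tuple        # object start position in meters


def sample_episode_params(rng):
    """Draw one randomized configuration; all ranges are illustrative assumptions."""
    return EpisodeParams(
        friction=rng.uniform(0.4, 1.2),
        mass_scale=rng.uniform(0.8, 1.2),
        light_intensity=rng.uniform(0.3, 1.5),
        texture_id=rng.randrange(10),
        object_xy=(rng.uniform(-0.2, 0.2), rng.uniform(-0.2, 0.2)),
    )
```

A training loop would call `sample_episode_params(rng)` before each reset and pass the result to whatever configuration hooks the simulator provides. Physical and visual parameters are randomized together here, but they can also be varied separately.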

2. Simulator Calibration and Tuning:

Adjusting simulation parameters to closely match real-world observations can enhance accuracy. Methods such as Monte Carlo sampling over simulator parameters can help identify where simulated and actual behaviors diverge, so that those parameters can be corrected. Some approaches include:

- Using real-world sensor data to tune simulated sensor noise models.

- Continuously updating physics models based on real-world test results.
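
As a toy illustration of calibration, the sketch below randomly samples candidate friction coefficients and keeps the one whose simulated velocity trace best matches a recorded one. The sliding-block model, the function names, and the search range are all assumptions. A real calibration would involve many parameters and measured data rather than this one-dimensional example.

```python
import random

G = 9.81  # gravitational acceleration, m/s^2


def simulate_velocities(mu, v0, dt, steps):
    """Velocity trace of a block sliding to rest under Coulomb friction."""
    v, trace = v0, []
    for _ in range(steps):
        trace.append(v)
        v = max(0.0, v - mu * G * dt)
    return trace


def calibrate_friction(real_trace, v0, dt, n_samples=2000, mu_range=(0.05, 1.0), seed=0):
    """Random search for the friction coefficient that best reproduces real_trace."""
    rng = random.Random(seed)
    best_mu, best_err = None, float("inf")
    for _ in range(n_samples):
        mu = rng.uniform(*mu_range)
        sim = simulate_velocities(mu, v0, dt, len(real_trace))
        # Sum of squared differences between simulated and recorded velocities.
        err = sum((s - r) ** 2 for s, r in zip(sim, real_trace))
        if err < best_err:
            best_mu, best_err = mu, err
    return best_mu
```

Here `real_trace` stands for velocities logged from the physical system at the same time step `dt`. Random search is used only because it is easy to read; gradient-based or Bayesian optimization are common alternatives.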

3. Hybrid Simulation-Real Data Training:

Combining simulated data with real-world data for training can improve model robustness. Techniques such as domain adaptation help models adjust to discrepancies between simulated and actual data distributions. Examples:

- Using reinforcement learning with both simulated and real-world experiences to train robotic grasping systems.

- Training computer vision models on synthetic images and fine-tuning them with real-world data.
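
One simple form of hybrid training is to build every batch from a fixed ratio of real and simulated samples, so that scarce real data is seen regularly without being drowned out. The sketch below shows only the batching step. The function name `mixed_batches` and the `real_fraction` parameter are assumptions, and this is a plain data-mixing scheme rather than a domain adaptation method.

```python
import random


def mixed_batches(sim_data, real_data, batch_size, real_fraction, n_batches, seed=0):
    """Yield shuffled batches containing a fixed share of real-world samples.

    Samples are drawn with replacement, so a small real dataset is reused often.
    """
    rng = random.Random(seed)
    n_real = round(batch_size * real_fraction)
    n_sim = batch_size - n_real
    for _ in range(n_batches):
        batch = rng.choices(real_data, k=n_real) + rng.choices(sim_data, k=n_sim)
        rng.shuffle(batch)
        yield batch
```

For instance, `mixed_batches(sim, real, batch_size=8, real_fraction=0.25, n_batches=5)` yields five batches of eight samples, each containing two real samples. Raising `real_fraction` late in training approximates the fine-tuning approach described above.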

4. Dynamic Compliance Tuning:

Incorporating adaptive control strategies that adjust to real-time feedback can help systems accommodate unmodeled dynamics and uncertainties encountered in real-world operations. Some techniques include:

- Model Predictive Control (MPC) to adjust robot movements dynamically based on real sensor feedback.

- Learning-based controllers that adapt parameters in real-time to reduce errors caused by unmodeled forces.
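
The idea of adapting to unmodeled forces can be shown with a far simpler scheme than MPC or a learned controller. The sketch below adds an integral-style compensation term to a PD position controller for a one-dimensional point mass. The plant, the gains, and the function name `run_tracking` are assumptions for illustration, and the example is not drawn from any specific robot or library.

```python
def run_tracking(disturbance, adapt_gain, target=1.0, kp=20.0, kd=8.0,
                 mass=1.0, dt=0.001, steps=10000):
    """Simulate PD position control with an online disturbance compensation term.

    Returns the final tracking error and the learned compensation force.
    `disturbance` is a constant force the controller's model does not know about.
    """
    x, v, compensation = 0.0, 0.0, 0.0
    for _ in range(steps):
        error = target - x
        # Adapt the compensation from real-time feedback (integral of the error).
        compensation += adapt_gain * error * dt
        command = kp * error - kd * v + compensation
        # The true plant includes the unmodeled disturbance force.
        accel = (command + disturbance) / mass
        v += accel * dt
        x += v * dt
    return target - x, compensation
```

With `adapt_gain=0.0` the controller settles with a persistent offset, because the proportional term alone must balance the disturbance. With a positive `adapt_gain` the compensation term converges toward the force needed to cancel the disturbance and the offset shrinks toward zero.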

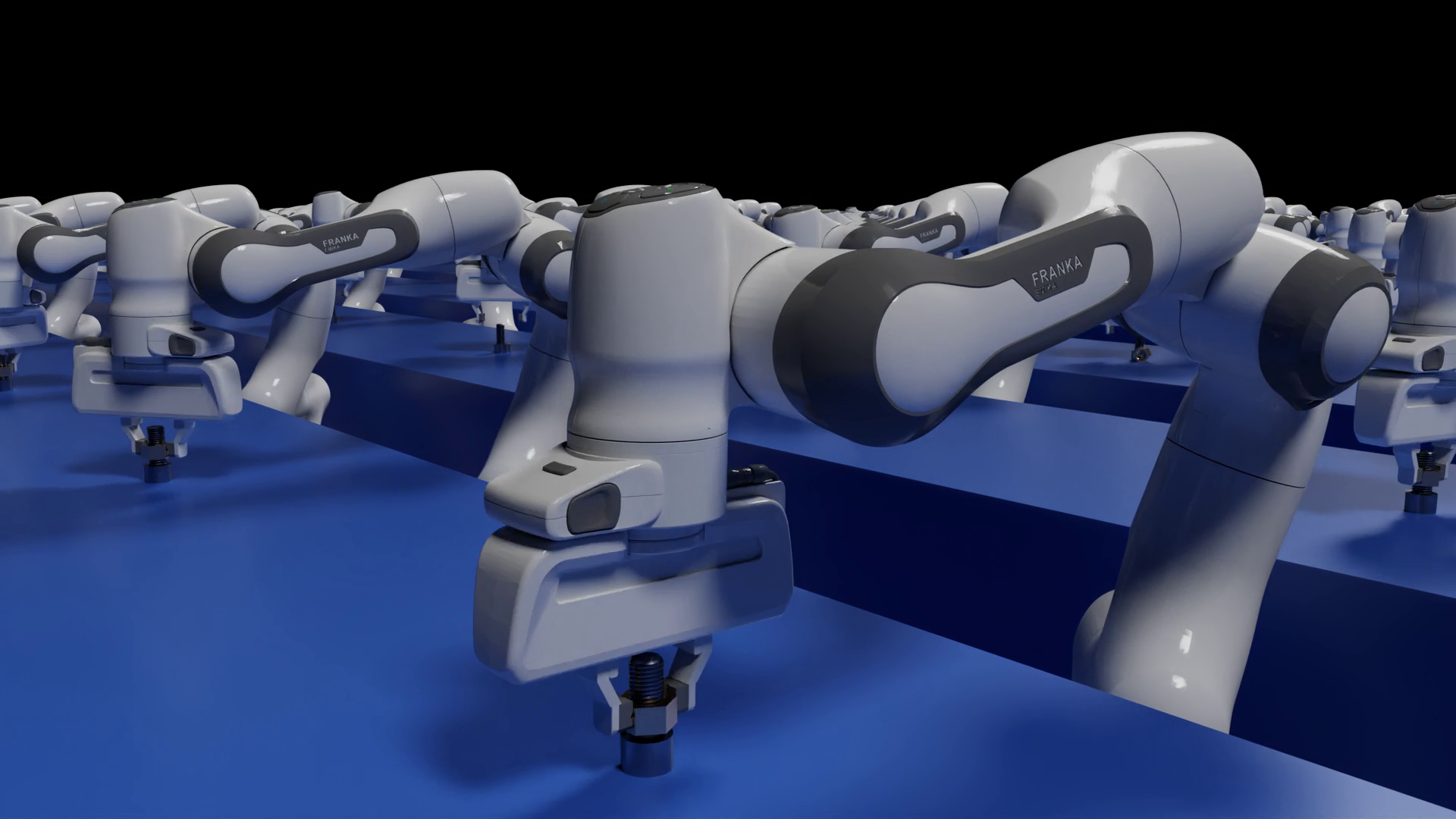

Tools and Frameworks for Robotic Simulation:

Several simulation platforms are used to address these challenges:

- NVIDIA Isaac Sim: A high-fidelity physics-based simulator optimized for robotics, integrating reinforcement learning and synthetic data generation for training AI models.

- Gazebo: An open-source robotic simulator widely used in academia and industry for ROS-based development.

- MuJoCo: A physics engine optimized for continuous control tasks, often used for reinforcement learning.

- Unity and Unreal Engine: Used for realistic visual simulations, synthetic data generation, and testing autonomous systems.

NVIDIA Isaac Sim and Isaac Lab:

NVIDIA Isaac Sim is designed specifically for robotics research, enabling high-fidelity simulation of robots with realistic physics, sensor models, and reinforcement learning capabilities. It includes:

- GPU-accelerated physics for real-time and batch simulations.

- Support for ROS and ROS 2 for easy integration with real-world robotic systems.

- Built-in synthetic data generation pipelines for training AI models.

Isaac Lab extends Isaac Sim by providing:

- Reinforcement learning environments optimized for robotic control.

- Pre-built benchmarks for training and evaluating robotic policies.

- Tight integration with NVIDIA Omniverse, enabling collaboration and data sharing across teams.